RECAST: A framework for reinterpreting physics searches at the LHC

On 22 December 2015, a SpaceX rocket delivered satellites to low Earth orbit and then, instead of being lost to the depths of the ocean, the rocket’s first stage -- the most expensive component of a rocket -- returned to Earth, landing at Cape Canaveral. It was the first time such a landing had been achieved, heralding the start of a new era of reusable rockets and more affordable access to space. Since then, the process has been streamlined, and rocket landing and reuse have become almost routine. In high energy physics, the ATLAS experiment is pursuing an analogous program, RECAST, to make some of its most precious assets -- the data analysis pipelines -- reusable and easily deployable. The program will allow physicists to analyze the ATLAS experiment’s dataset more comprehensively and to study physics models that have not been covered so far.

At the core of the scientific method lies the interplay between theory and experiment: the formulation of a hypothesis and the testing of that hypothesis through experimentation. Here, high energy physics finds itself in a peculiar situation: after the discovery of the Higgs boson in 2012, the Standard Model of Particle Physics is now complete. However, despite its many successes, the Standard Model cannot account for many phenomena we observe, such as the existence of Dark Matter, the matter-antimatter asymmetry or the origin of neutrino masses, to name just a few. Over the last decades, many new theories have been proposed to explain these phenomena, but they can often only be tested using the data of the few experiments at the Large Hadron Collider.

Figure 1: Sketch of an analysis. For a given corner of the dataset, the Standard Model backgrounds are estimated (blue areas) as well as a possible contribution from new physics phenomena. Here, the data (black markers) is sufficiently well described by the Standard Model contributions, and thus the new physics hypothesis is ruled out.

Testing a theory involves a careful measurement of the collisions in a particular corner of the dataset. The analysis teams must calculate precisely how many events would be expected from background Standard Model processes in that “corner” and similarly how many events one would expect from the particular theory of new physics one is interested in. With these calculations in hand, analysts can look at the actual observed data and perform a statistical analysis in order to decide whether the particular theory is favored by the data or rather excluded in favor of the Standard Model (see Figure 1). In the end such an analysis is defined by a complex software-based analysis pipeline. Most of the work in developing such a pipeline is in carving out the corner in the dataset that holds the most information about the studied theory as well as making the precise Standard Model calculations: if you want to discover new physics, you first need to understand your backgrounds!
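To make the statistical step a little more concrete, here is a minimal Python sketch of the simplest possible case: a single "corner" of the dataset treated as one counting experiment. It is not the procedure used by ATLAS, where analyses involve many bins and systematic uncertainties. The function names, the example yields and the 0.05 threshold (the conventional 95% confidence level) are assumptions chosen purely for illustration. The sketch computes a simplified form of the CLs ratio commonly used in collider searches.

```python
import math


def poisson_cdf(n_obs, mean):
    """Probability of observing n_obs or fewer events for a Poisson mean."""
    term = math.exp(-mean)  # probability of observing exactly 0 events
    total = term
    for k in range(1, n_obs + 1):
        term *= mean / k  # probability of k events, built from that of k - 1
        total += term
    return total


def cls(n_obs, background, signal):
    """Simplified CLs ratio for a single-bin counting experiment.

    Small values mean the data look much less likely under
    signal + background than under background alone.
    """
    p_signal_plus_background = poisson_cdf(n_obs, background + signal)
    p_background_only = poisson_cdf(n_obs, background)
    return p_signal_plus_background / p_background_only


def is_excluded(n_obs, background, signal, alpha=0.05):
    """True if the signal hypothesis is excluded at the (1 - alpha) level."""
    return cls(n_obs, background, signal) < alpha


if __name__ == "__main__":
    # Illustrative numbers only, not taken from any real analysis.
    observed = 100
    expected_background = 100.0
    for expected_signal in (5.0, 50.0):
        verdict = is_excluded(observed, expected_background, expected_signal)
        print(f"signal = {expected_signal}: excluded = {verdict}")
```

In this toy setup, with 100 observed events and 100 expected background events, a hypothetical theory predicting 50 additional events is excluded, while one predicting only 5 additional events is not.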

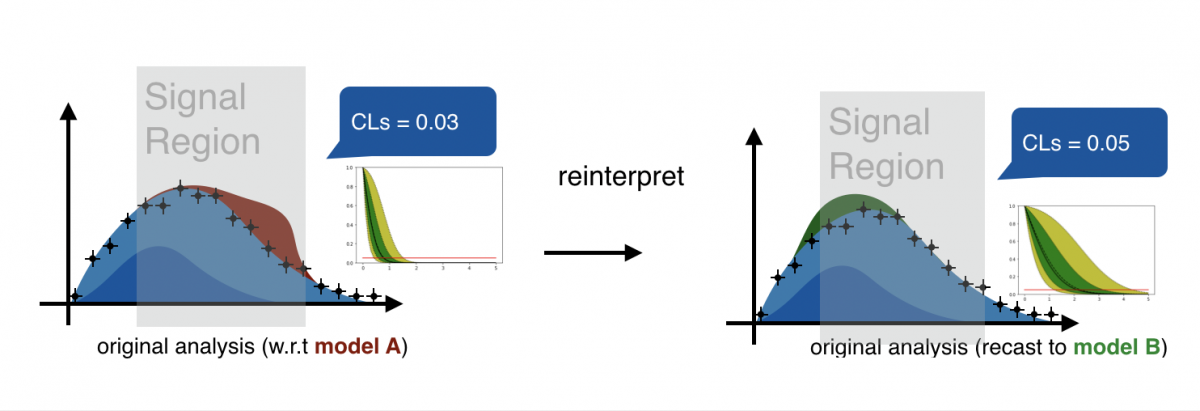

Given the effort needed for creating such a pipeline from scratch to study a given theory in detail, the first question one should ask before considering a new theory is: “is it already excluded by what we know from past experiments?” This is especially important given the large range of possible theories of new physics. Here one can employ a powerful method: reinterpretation.

Reinterpretation exploits the fact that a theory’s effects can materialize in a corner of the dataset that was already analyzed with respect to a different theory. If this is the case, most of the work is already done: the data slice and the code to analyze it are well-defined, and even the Standard Model backgrounds are already estimated! The only remaining task is to estimate the effect of the new theory and perform the statistical analysis to decide whether it is viable in the face of the observed data or not (see Figure 2).

Software Preservation - Workflows and the Container Revolution

This is where software preservation comes in: to calculate the expected effects of the new theory in the data region studied by an existing analysis, the analysis pipeline must be re-activated to make the necessary calculations on the new input theory. That means the software making up the pipeline must be preserved in a reusable way -- much like the rocket boosters are recovered to be reused later.

In order to preserve software for later (re-)use, it’s not enough to simply archive its code. Almost all scientific software builds on top of other software, so in order to have functional code one has to preserve not only the code itself but also all of its dependencies. This might sound daunting, but with the rise of cloud computing new possibilities are emerging: the concept of Linux containers, popularized through the open source project Docker, defines an easily shareable, executable and self-contained format to package and archive entire software environments. This technology is increasingly used not only in cloud computing but also in software-heavy science domains such as bioinformatics and high-energy physics. Thanks to centrally supported infrastructure developed within the ATLAS experiment and CERN, analysis teams can now easily preserve their analysis code in this format, such that it can be used for reinterpretation purposes.

However, software preservation alone is not enough: one also has to preserve the knowledge of exactly how to use the software in order to be able to extract new science out of the old code. Here, workflow languages, such as CWL, yadage and others, help: they describe not only how to achieve individual tasks, such as selecting interesting events, using the preserved software, but also provide -- just like a recipe -- the exact ordering in which the various tasks of an analysis must be carried out.
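The "recipe" aspect can be illustrated with a small Python sketch. It does not use CWL or yadage syntax. It only shows the underlying idea: each step names the preserved software environment it runs in and the steps it depends on, and the execution order follows from those dependencies. The step names and container image names below are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow description: each step records which container
# image provides its software and which steps must finish before it starts.
WORKFLOW = {
    "generate_signal": {
        "image": "example/event-generator:1.0",
        "depends_on": [],
    },
    "simulate_detector": {
        "image": "example/detector-simulation:1.0",
        "depends_on": ["generate_signal"],
    },
    "select_events": {
        "image": "example/event-selection:1.0",
        "depends_on": ["simulate_detector"],
    },
    "statistical_fit": {
        "image": "example/statistics:1.0",
        "depends_on": ["select_events"],
    },
}


def execution_order(workflow):
    """Return the step names in an order that respects all dependencies."""
    graph = {name: set(step["depends_on"]) for name, step in workflow.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for name in execution_order(WORKFLOW):
        print(f"run {name} in {WORKFLOW[name]['image']}")
```

Because the ordering is derived from the declared dependencies rather than written down by hand, the same description keeps working when someone who was not part of the original analysis team re-runs it with a new signal model as input.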

RECAST

The RECAST (Request Efficiency Computation for Alternative Signal Theories) project combines the scientific motivation for a rich reinterpretation programme at the LHC with the technical capabilities afforded by workflow languages and preservable software environments. The major search groups within the ATLAS collaboration now require new analyses to be preserved using these new tools such that when a new model of physics is proposed by theorists, the collaboration can re-use these archived analyses to derive a first assessment through reinterpretation. The preserved analyses are also expected to be used in a wave of summary studies planned once the data analyses of the second run of the LHC are finalized. For example, a global analysis of a large class of supersymmetric models, referred to as the phenomenological MSSM, allows a detailed assessment of the state of supersymmetry beyond the narrower scope of individual models.

Hunting the Dark Higgs

In summer 2019, the first fruits of this new type of analysis preservation were reaped. A group of theorists had proposed a novel way in which dark matter could be produced together, or in association, with a new type of Higgs boson, the “dark Higgs”. The authors noted that this new production mode is similar to the associated production of dark matter and the Standard Model Higgs boson. In both cases the experimental signature consists of high energy jets from the decay products of the (dark) Higgs and missing energy carried away by the dark matter particles, which leave no trace in the detector.

Figure 3: Reinterpretation in Action. Left: The result of the original analysis. The observed data is shown as black markers, while the filled colored histograms are Standard Model backgrounds. The original signal is shown as a dashed histogram. Right: The reinterpreted analysis. Data and Backgrounds remain unchanged, but a new signal component is now being considered.

While there was no dedicated search yet for this new model, the more standard case of associated dark matter production with a Standard Model Higgs had indeed already been searched for in the ATLAS data. The existing analysis was designed in a general enough way to also be sensitive to the dark-Higgs scenario, and the analysis pipeline had been archived with the new cloud-computing technologies. Thus a reinterpretation of the existing analysis in the context of the dark-Higgs scenario was possible. Even though the analysis was not optimized for the dark-Higgs scenario, the data slice it studied was informative enough to rule out a range of dark Higgs mass values, as seen in Figure 4.

Figure 4: Mass values of the resonance and of the dark Higgs. The values to the left of the black contour could be ruled out through the reinterpretation of an analysis studying dark matter production in association with a Standard Model Higgs boson.

A new bridge between Theory and Experiment

The first result obtained through RECAST is hopefully only the beginning of a new avenue through which experimentalists and theorists can exchange their findings. As with the first landed booster of SpaceX, this first attempt produced a lot of insights not only about the physics at hand but, crucially, also about the process of publishing reinterpretation results in general. As we gain more experience, we hope to streamline the process, so that publishing RECAST results becomes as routine as booster landings have become.

Further Reading

-ATLAS collaboration (2014). ATLAS Data Access Policy. CERN Open Data Portal. DOI: 10.7483/OPENDATA.ATLAS.T9YR.Y7MZ

-ALICE collaboration (2013). ALICE data preservation strategy. CERN Open Data Portal. DOI: 10.7483/OPENDATA.ALICE.54NE.X2EA

-CMS collaboration (2012). CMS data preservation, re-use and open access policy. CERN Open Data Portal. DOI: 10.7483/OPENDATA.CMS.UDBF.JKR9

-LHCb collaboration (2013). LHCb External Data Access Policy. CERN Open Data Portal. DOI: 10.7483/OPENDATA.LHCb.HKJW.TWSZ

-Kraml, S., Sekmen, S., “Searches for New Physics: Les Houches Recommendations for the Presentation of LHC Results”, Proceedings of Les Houches PhysTeV 2011 workshop: https://indico.cern.ch/event/173341/attachments/218701/306311/LesHouches-LHC-Recommendations.pdf

-Cranmer K and Yavin I 2011 Journal of High Energy Physics 2011 38 ISSN 1029-8479 URL http://dx.doi.org/10.1007/JHEP04(2011)038

-Chen, X., et al. "Open is not enough", Nature Physics 15, 113-119 (2019): https://www.nature.com/articles/s41567-018-0342-2

-Maguire E, Heinrich L and Watt G 2017 22nd International Conference on Computing in High Energy and Nuclear Physics (CHEP 2016) San Francisco, CA, October 14-16, 2016 (Preprint 1704.05473) URL https://inspirehep.net/record/1592380/files/arXiv:1704.05473.pdf

-Cranmer, K. and Heinrich, L. on behalf of the ATLAS Collaboration, "Analysis Preservation and Systematic Reinterpretation within the ATLAS Experiment", Journal of Physics: Conference Series 1085 (2018), IOP Publishing, London. https://iopscience.iop.org/article/10.1088/1742-6596/1085/4/042011/pdf